Google Cloud Databases Rapid Research Program

Google

How can we benefit from consistent, evaluative research with high impact answers on a compressed timeline?

Summary

While managing the pilot implementation of a rapid research program on the Google Cloud Platform’s UX Databases team, I ran usability sessions to evaluate design explorations across products.

In anticipation of a company-wide design change and system upgrade that would affect the user experience across Google Cloud Platform (GCP), most of our usability studies served to proactively assess the risks and potential impact of these changes. They also provided an opportunity to design for navigational consistency across products, which was previously lacking.

My Role

Evaluative research: I managed all aspects of usability testing. Responsibilities included recruitment, study plan and discussion guide creation, session moderation and presentation of findings.

Program management: I created a set of principles to use as a guide for selecting and testing projects based on the rapid research model. I established timelines and study protocol and templatized the process end to end.

Team

UX Design Manager, Senior Product UX Researchers, UX Designers, Product Managers, Front End Engineers

Due to NDA, I cannot share project specifics. I will instead outline an example of how I tested navigational design explorations using the rapid research process.

Usability Testing

Objectives

We wanted to understand expectations around which resource users would like to be directed to after completing a task, and how they would prefer to track the progress of their operations. We tested two design explorations:

1. A more generic navigational structure to highlight any potential risks before an upcoming launch.

2. Longer-term implications of a component for tracking long-running operations that would potentially be implemented across database products.

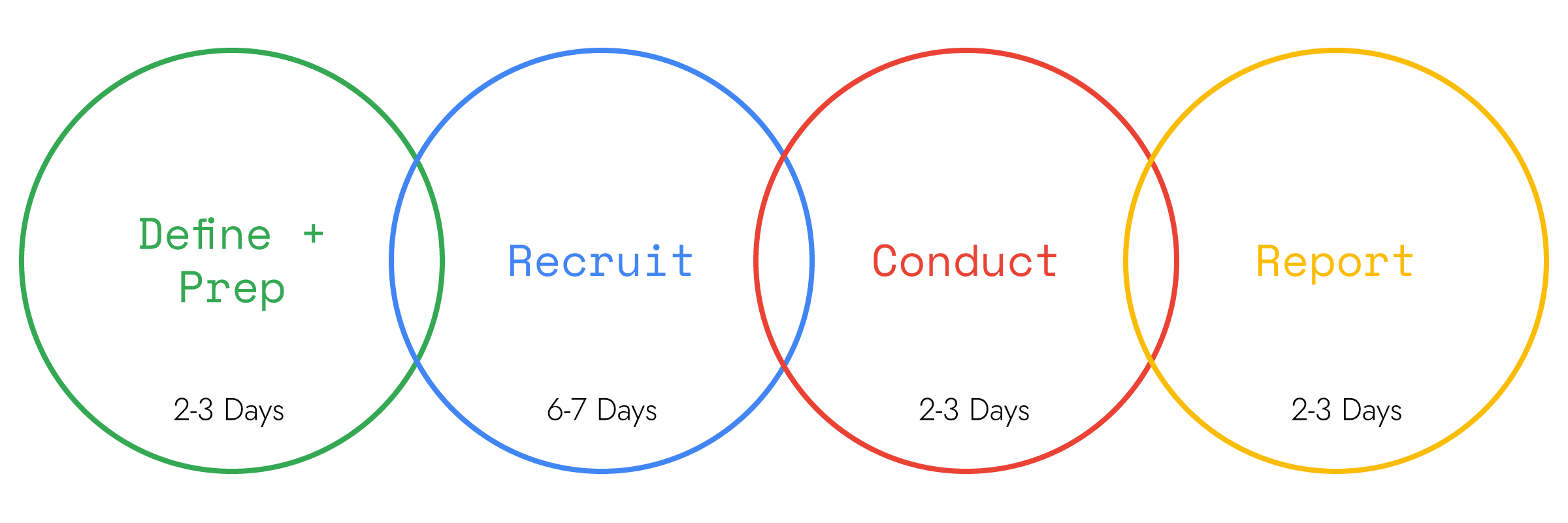

The 4 phases of rapid research.

Define and Prep

I worked with designers and the product researcher to define study goals and key questions, such as: what is the user response to the component we would like to introduce? How would either of these designs degrade the user experience, if at all?

After determining project logistics, I created the study plan with UXR oversight. I then designed a moderator guide and organized a dry run, where we could test the script alongside the prototypes to prepare for sessions.

Designers used a variety of prototyping tools for rapid research, including InVision, Figma, Sketch, Axure and internal tools.

Recruit

We wanted to speak with data engineers, database administrators and software architects who use the Google Cloud SQL product. I created a screener and worked closely with recruitment to identify representative users that could participate remotely.

Conduct

Participants were highly specialized database users from companies ranging from small startups to large enterprises. After a round of introductions, where I asked them to share their background and experience with the product, we jumped into the fun part: exploring the prototype.

I gave participants a scenario and asked them to complete tasks with that scenario in mind. While they navigated the prototype, I listened closely for areas to ask follow-up questions or for points of clarification. I also considered creative ways to give navigational hints, if needed.

Report

I analyzed user feedback and presented verbal and written findings and recommendations to key stakeholders and the broader product team.

Outcomes

Findings informed design decisions, prioritization and engineering workload.

We found the designs created for the upcoming launch introduced no risk to users, and they have been successfully implemented.

Regarding the operations tracking component: we were encouraged by our users, who would be excited to have it as a tool, and our research was used to validate its necessity. It is still being tested across products.

Analysis

In order to adhere to rapid research’s condensed timeline, strategic analysis is required. For me, this begins with the creation of the moderator guide. Framing the research around a scenario works for two reasons: it sets the stage for the user to accomplish goals, while providing structure for streamlined analysis.

While determining how to tell a story that will guide the participant, I am also outlining my report. Developing a template or code for findings ahead of time helps me move swiftly.

Challenges

Operating in a highly specialized product space presents a special set of “what-ifs,” especially with a small user base where many users may not frequently (or ever) interact with the UI. Recruiting high-quality participants ensures we are able to get appropriate feedback. Precise screeners and clear communication with recruiters significantly reduce risk.

There is also always the question of prototype fidelity and interactivity, which largely depends on the types of questions we’re trying to answer. Narrowly scoped questions that can be answered with a low-fidelity prototype, or mocks, are perfect candidates for rapid research.

Conducting a “dry run,” where we test the prototype alongside the moderator guide before sessions begin, proves highly beneficial.

Reflections

Usability testing in a highly technical space requires skill in listening three-dimensionally. When armed with specific research objectives and key questions, I can simultaneously stay one step ahead, keep track of moments to return to, and focus on the present.

Designing and facilitating usability studies can feel like completing a chunk of a puzzle where all the pieces are the same color. There are fleeting moments of overwhelm, which are gleefully defeated by solid strategy; and an absolute delight when all the pieces fit together.